On UI test automation

In a recent Twitter interaction, I was pushing Alan Page to clarify a key point on his anti-UI-automation thread. I didn’t get it, and there have been a few more responses, so I wanted to make my current thinking and position clear. I tweeted this as well, but here’s the thread for those not following on Twitter. It can also be seen as an extension of my series on test strategy.

Simply –

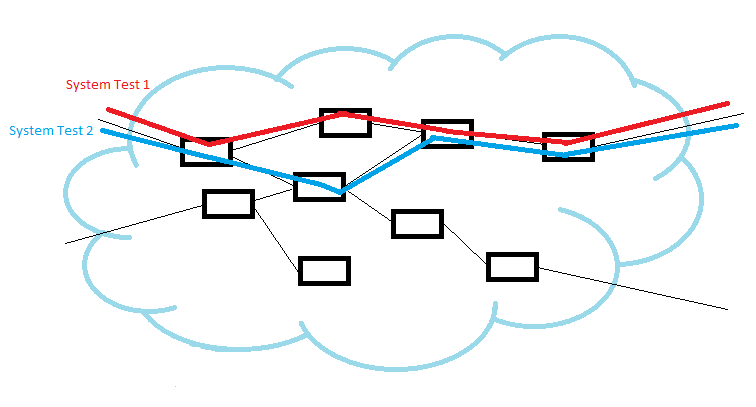

Full-stack system tests, executed through the UI, yield a lot of information because they touch a lot of the system. The tradeoff is precision. That is, they tell you something didn’t work but will rarely tell you much about why, so you will have to dig. They quickly become inefficient in terms of coverage.

If you need coverage quickly, use them. If you find yourself using them to get detailed information, look for how much redundancy they have compared to other tests. Are they executing parts of the system touched by other tests? If yes, start thinking about how you can move to smaller, more focused tests.

FTW, think about how you can turn your end-to-end tests into monitoring so they run all the time. Think about how you can move your unit-level checks to be assertions so they run all the time everywhere. To do this, you need to think about building automation/tooling as component pieces of test design, data, execution, inspection, evaluation and reporting. Try to avoid duplicating any of this stuff.
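One way to read "move your unit-level checks to be assertions" is to write the evaluation step once and call it from both the unit test and the production path. The following is only a minimal sketch of that idea: `check_discount`, `apply_discount` and the discount rule itself are hypothetical names and behaviour invented for illustration, not taken from any real system.

```python
def check_discount(price: float, discounted: float) -> None:
    """Evaluation step, written once: a discount never raises a price or makes it negative."""
    assert 0 <= discounted <= price, f"bad discount: {price} -> {discounted}"


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical production code. The shared check runs on every call."""
    discounted = price * (1 - percent / 100)
    check_discount(price, discounted)
    return discounted


def test_apply_discount() -> None:
    """Unit test: supplies the test data and reuses the same evaluation."""
    for price, percent in [(100.0, 10.0), (50.0, 0.0), (20.0, 100.0)]:
        check_discount(price, apply_discount(price, percent))
```

Here the test contributes only design and data, while the evaluation lives in one place. Note that Python drops `assert` statements when run with `-O`, so a check that must run everywhere may need an explicit `if` and `raise` instead.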

There are tooling gaps that make this hard, but a lot can still be done.

The foundational model for me when forming an overall approach to test strategy is that there are two strawman test approaches as starting points.

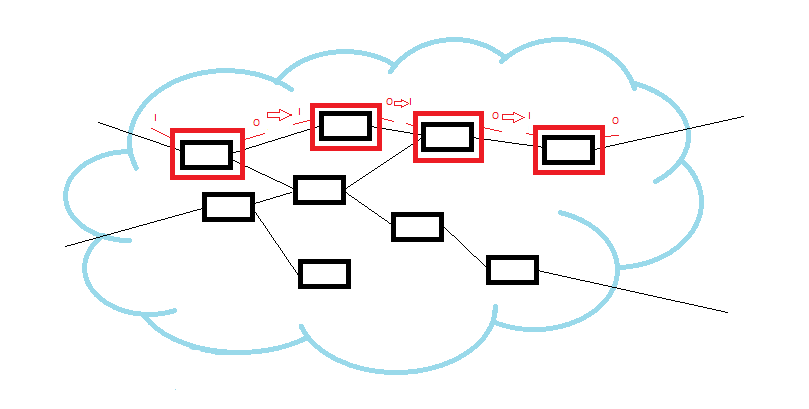

The first is that you could, in theory, test the entire system through a collection of unit tests. In practice, we don’t do this because at every interaction between methods or classes (or services in a SOA/microservice world) or components, you need to duplicate information. That is, to emulate a system test, you need to start with a unit test at the system boundary, then you need to take the output result of that unit test and pass it as the input to some other unit test. Then you need to do this all the way down the chain to the final output at the system boundary. In practice, this is unmanageable, so it really doesn’t happen.
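To make the duplication concrete, here is a small sketch of a three-stage pipeline where one system test is emulated by a chain of unit tests. The functions, the `PRICES` table and the order format are all assumptions made up for this example.

```python
PRICES = {"A1": 2.50}  # hypothetical price list


def parse_order(raw: str) -> dict:
    """Stage 1: turn 'sku,qty' into a structured order."""
    sku, qty = raw.split(",")
    return {"sku": sku, "qty": int(qty)}


def price_order(order: dict) -> float:
    """Stage 2: look up the unit price and multiply by quantity."""
    return PRICES[order["sku"]] * order["qty"]


def format_total(total: float) -> str:
    """Stage 3: render the total at the system boundary."""
    return f"Total: {total:.2f}"


def test_parse() -> None:
    assert parse_order("A1,4") == {"sku": "A1", "qty": 4}


def test_price() -> None:
    # The input here repeats the expected output of test_parse.
    assert price_order({"sku": "A1", "qty": 4}) == 10.0


def test_format() -> None:
    # The input here repeats the expected output of test_price.
    assert format_total(10.0) == "Total: 10.00"


def test_system() -> None:
    # The single full-stack test that the three unit tests emulate.
    assert format_total(price_order(parse_order("A1,4"))) == "Total: 10.00"
```

Each intermediate value appears twice, once as an expected output and once as an input. If `parse_order` changes its output shape, `test_price` can keep passing against a stale input, which is the kind of misalignment at unit boundaries that the chain has to be maintained by hand to avoid.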

Tools like PACT are an attempt to deal with the fact that at each interaction, there is redundancy. With PACT, you can at least maintain each interaction only once, but I’ve heard stories of teams that struggle with the maintenance of contracts. This happens when a part of the system interacts with a lot of services and the interfaces are not stable. Things can be working fine for most people, but some teams can be forced into a lot of maintenance. This is a symptom of pursuing the above strawman approach.

The second option is to execute all tests full stack, through the external system boundary (which could be a UI, batch interface or APIs). This has the upsides of being reflective of real-world use, and you can get broad coverage quickly. This is the default strategy for getting quick coverage of a legacy system with poor testing. It has downsides: there may be parts of the system that are impossible to cover (so bugs are uncovered later), the information yielded by the tests is unlikely to be precise, and these tests will typically take longer to run due to the setup and startup costs of the environment. Managing data is also likely to be more complicated, and tests quickly start to duplicate coverage.

Every component in Test 1 is touched for the first time. For the second test, covering one new class or method requires touching three that have already been covered.
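The redundancy described here can be counted. The sketch below assumes you can obtain, for each test, the set of components it touches (for example from a coverage tool); the component names `A` to `E` and the function `marginal_coverage` are hypothetical.

```python
def marginal_coverage(tests: dict[str, set[str]]) -> list[tuple[str, int, int]]:
    """For each test, in order, count components newly covered and components re-covered."""
    covered: set[str] = set()
    report = []
    for name, touched in tests.items():
        new = touched - covered
        report.append((name, len(new), len(touched) - len(new)))
        covered |= touched
    return report


# Two full-stack tests, each touching four components.
tests = {
    "test_1": {"A", "B", "C", "D"},
    "test_2": {"A", "B", "C", "E"},
}

for name, new, repeated in marginal_coverage(tests):
    print(f"{name}: {new} new, {repeated} already covered")
```

With this data the first test covers four new components and the second covers one new component while re-touching three. A falling ratio of new to repeated coverage is one possible signal that it is time to move toward smaller, more focused tests.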

In practice, we find teams pursuing a mix of strategies, not necessarily consciously, but generally in response to problems and challenges encountered pursuing a strategy heavily weighted toward one of the strawman approaches. That is, as system (or end-to-end) test execution slows, they increase unit test coverage. If teams are unit-test heavy, bugs will tend to be missed due to misalignment of expectations at the unit boundaries, and teams compensate with broader tests.

To duplicate the system test, the output of each unit test must be the input of another unit test. This creates a risk of error and a different kind of redundancy which you would need good design to avoid.

In the middle, we have the usually vaguely defined ‘integration tests’, an in-between approach of tests broader than unit-level but smaller than full-stack system tests.

All of these approaches have a weakness in that they tend to build on a functional model of the product. If we want to maximise our ability to find problems, we benefit from working from multiple models (e.g. state, workflows, data). This is probably too much to cover in this post, so I’ll leave it at the test scope discussion for today. Also untouched for now is how to move these tests to be continuous, and the role of UI tests in a lot of modern microservice architectures.
